Crawling Basics

How does a crawler fetch “all” Web pages? Before the advent of the Web, traditional text collections such as bibliographic databases and journal abstracts were provided to the indexing system directly, say, on magnetic tape or disk. In contrast, there is no catalog of all accessible URLs on the Web. The only way to collect URLs is to scan collected pages for hyperlinks to other pages that have not been collected yet. This is the basic principle of crawlers. They start from a given set of URLs, progressively fetch and scan them for new URLs (outlinks), and then fetch these pages in turn, in an endless cycle. Newly found URLs thus represent potentially pending work for the crawler. The set of pending work expands quickly as the crawl proceeds, and implementers prefer to write this data to disk, both to relieve main memory and to guard against data loss in the event of a crawler crash. There is no guarantee that all accessible Web pages will be located in this fashion; indeed, the crawler may never halt, as pages will be added continually even as it is running. Apart from outlinks, pages contain text; this is submitted to a text indexing system to enable information retrieval using keyword searches.
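The fetch-and-scan cycle described above can be sketched in a few lines of Python. This is only an illustrative sketch, not a production design: the names `crawl`, `LinkExtractor`, `fetch`, and `max_pages` are hypothetical, the pending work is held in memory rather than on disk, and `fetch` is assumed to be any caller-supplied function that returns the HTML for a URL or `None` on failure.

```python
from collections import deque
from html.parser import HTMLParser
from urllib.parse import urldefrag, urljoin


class LinkExtractor(HTMLParser):
    """Collects absolute URLs from the href attribute of <a> tags."""

    def __init__(self, base_url):
        super().__init__()
        self.base_url = base_url
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag != "a":
            return
        for name, value in attrs:
            if name == "href" and value:
                # Resolve relative links and drop the #fragment part.
                absolute, _fragment = urldefrag(urljoin(self.base_url, value))
                self.links.append(absolute)


def crawl(seed_urls, fetch, max_pages=100):
    """Breadth-first crawl starting from seed_urls.

    fetch(url) is a hypothetical callable returning HTML text or None.
    max_pages is an assumed stopping rule; a real crawl may never halt.
    """
    frontier = deque(seed_urls)   # pending work: URLs not yet fetched
    seen = set(seed_urls)         # every URL ever added to the frontier
    pages = {}                    # url -> page text (stand-in for a repository)

    while frontier and len(pages) < max_pages:
        url = frontier.popleft()
        html = fetch(url)
        if html is None:
            continue              # fetch failed; skip this URL
        pages[url] = html

        extractor = LinkExtractor(url)
        extractor.feed(html)
        for link in extractor.links:
            if link not in seen:  # only uncollected pages become new work
                seen.add(link)
                frontier.append(link)
    return pages
```

Passing `fetch` in as a parameter keeps the sketch independent of any particular HTTP library; for experimentation it can be as simple as the `get` method of a dictionary that maps URLs to HTML strings.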

It is quite simple to write a basic crawler, but a great deal of engineering goes into industry-strength crawlers that fetch a substantial fraction of all accessible Web documents. Web search companies like AltaVista, Northern Light, and Inktomi publish white papers on their crawling technologies, but piecing together the technical details is not easy. There are only a few documents in the public domain that give some detail, such as a paper about AltaVista’s Mercator crawler and a description of Google’s first-generation crawler.

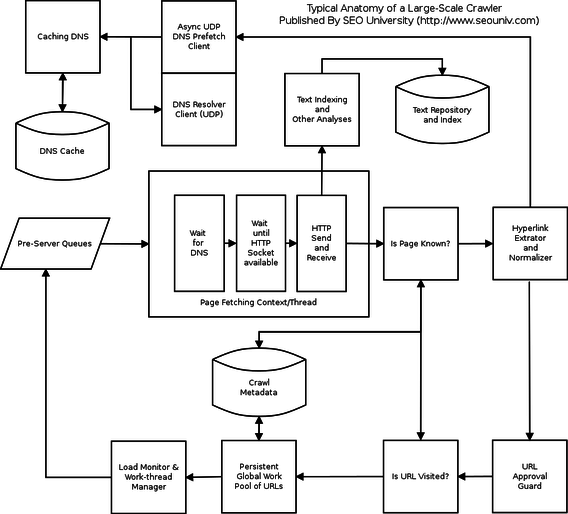

Figure: Typical Anatomy of a Large-Scale Crawler, By SEO University

The central function of a crawler is to fetch many pages at the same time, in order to overlap the delays involved in

1. Resolving the hostname in the URL to an IP address using DNS

2. Connecting a socket to the server and sending the request

3. Receiving the requested page in response

together with time spent in scanning pages for outlinks and saving pages to a local document repository. Typically, for short pages, DNS lookup and socket connection take a large portion of the processing time. This cost depends on round-trip times on the Internet and generally cannot be reduced by buying more bandwidth.
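The benefit of overlapping these delays can be illustrated with a small simulation. The sketch below makes no real network calls: each of the three steps is replaced by an `asyncio.sleep` with an assumed delay of 50 ms, and the names `fetch_page`, `fetch_all`, and `timed_crawl` are hypothetical. It only shows that when many fetches are in flight at once, the total wall-clock time is close to the latency of a single fetch rather than the sum over all fetches.

```python
import asyncio
import time


async def fetch_page(url, dns_delay=0.05, connect_delay=0.05, transfer_delay=0.05):
    """Simulated page fetch; the delays are assumed values, not measurements."""
    await asyncio.sleep(dns_delay)       # 1. resolve hostname via DNS
    await asyncio.sleep(connect_delay)   # 2. connect socket and send request
    await asyncio.sleep(transfer_delay)  # 3. receive the page
    return url, f"<html>content of {url}</html>"


async def fetch_all(urls):
    """Start every fetch at once so that their waiting periods overlap."""
    return await asyncio.gather(*(fetch_page(u) for u in urls))


def timed_crawl(urls):
    """Run the simulated fetches and return (pages, elapsed_seconds)."""
    start = time.perf_counter()
    pages = asyncio.run(fetch_all(urls))
    return pages, time.perf_counter() - start


if __name__ == "__main__":
    urls = [f"http://example.test/page{i}" for i in range(10)]
    pages, elapsed = timed_crawl(urls)
    print(f"fetched {len(pages)} pages in {elapsed:.2f} s")
```

With ten URLs, fetching one page at a time would take roughly 1.5 seconds under these assumed delays, whereas the overlapped version should finish in a small fraction of that.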

The entire life cycle of a page fetch, as listed above, is managed by a logical thread of control. This need not be a thread or process provided by the operating system, but may be specifically programmed for this purpose for higher efficiency. Each such "Page Fetching Context/Thread" starts with DNS resolution and finishes when the entire page has been fetched via HTTP (or some error condition arises). After the fetch context has completed its task, the page is usually stored in compressed form to disk or tape and also scanned for outgoing hyperlinks (hereafter called “outlinks”). Outlinks are checked into a work pool. A load manager checks out enough work from the pool to maintain network utilization without overloading it. This process continues until the crawler has collected a “sufficient” number of pages. It is difficult to define “sufficient” in general. For an intranet of moderate size, a complete crawl may well be possible. For the Web, there are indirect estimates of the number of publicly accessible pages, and a crawler may be run until a substantial fraction is fetched. Organizations with fewer networking or storage resources may need to stop the crawl for lack of space, or to build indices frequently enough to be useful.
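The interplay between the work pool and the load manager might look roughly like the following sketch. All names here (`WorkPool`, `LoadManager`, `max_in_flight`, `page_budget`) are hypothetical, and the fixed cap on concurrent fetches is only one simple way to approximate "maintaining network utilization without overloading it"; a real crawler would likely adapt this limit to observed network conditions. The `page_budget` parameter stands in for the "sufficient" number of pages.

```python
from collections import deque


class WorkPool:
    """Pending URLs; each URL is accepted at most once."""

    def __init__(self):
        self._pending = deque()
        self._seen = set()

    def check_in(self, urls):
        """Add previously unseen URLs; return how many were added."""
        added = 0
        for url in urls:
            if url not in self._seen:
                self._seen.add(url)
                self._pending.append(url)
                added += 1
        return added

    def check_out(self, n):
        """Remove and return up to n pending URLs."""
        batch = []
        while self._pending and len(batch) < n:
            batch.append(self._pending.popleft())
        return batch

    def __len__(self):
        return len(self._pending)


class LoadManager:
    """Hands out work so that at most max_in_flight fetches run at once."""

    def __init__(self, pool, max_in_flight, page_budget):
        self.pool = pool
        self.max_in_flight = max_in_flight  # assumed fixed concurrency cap
        self.page_budget = page_budget      # assumed notion of "sufficient"
        self.in_flight = set()
        self.completed = 0

    def next_batch(self):
        """Check out as many URLs as free slots and remaining budget allow."""
        free = self.max_in_flight - len(self.in_flight)
        remaining = self.page_budget - self.completed - len(self.in_flight)
        batch = self.pool.check_out(max(0, min(free, remaining)))
        self.in_flight.update(batch)
        return batch

    def finished(self, url, outlinks):
        """Record a completed fetch and check its outlinks into the pool."""
        self.in_flight.discard(url)
        self.completed += 1
        self.pool.check_in(outlinks)

    def done(self):
        """True once the budget is met or no work remains anywhere."""
        out_of_work = not self.in_flight and len(self.pool) == 0
        return self.completed >= self.page_budget or out_of_work
```

A driver loop would repeatedly call `next_batch()`, start a fetch context for each returned URL, and call `finished()` with the outlinks found in each page until `done()` returns true.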